Age Verification is Incompatible with the Internet

The recent move among U.S. states to add digital "age verification" to websites which serve adult content is a troubling one. Digital age verification systems are rife with privacy concerns, many requiring you to upload your government ID to third-party vendors or even directly to the website in question. Privacy advocates and companies within the industry have correctly identified all sorts of problems with the idea of tying your personal identity to your browsing activity.

For one thing, assigning this responsibility to website operators normalizes uploading Personally Identifying Information (PII) to every site which requires verification. This is the opposite of privacy-by-design, and will only have the effect of making the internet a much less private, more dangerous place. A system where you have to transmit your PII online at all also creates a substantial risk of identity theft. We live in a world where even major governments regularly fail to secure the digital identities of their citizens, and yet these lawmakers expect every random adult website on the planet to adequately secure the IDs of their users. There is perhaps no other industry I would entrust with my personal information less, so this idea is ridiculous.

The Regulations Aren't Working, Yet

For the most part, adult sites are currently not giving in, and rightly so. Most recently, Aylo (the company which owns sites like Pornhub, RedTube, and YouPorn) blocked residents in Montana and North Carolina from accessing their sites entirely. For companies like Aylo, the prospect of having to collect and securely manage PII from all their users is just as distasteful as handing over that PII would be for you. This isn't because they want underage visitors by any means, but because they don't wish to deal with those security concerns either, and have correctly observed that enforcing this across the internet is completely impossible.

The reality of the internet is that it is worldwide. Unduly regulating companies like Aylo, which you can control, will only serve to promote the companies you can't. No consumer in Montana, Utah, Mississippi, or any of these other states will submit to identifying themselves to the websites they visit when they can just as easily find the same content (or worse!) on some unregulated site hosted in Eastern Europe or wherever else.

Adult content companies withdrawing entirely from markets which require age verification seems to be having the intended effect, albeit slowly. The Republican state senator in Utah who sponsored that state's age verification law told Ars Technica that he "did not expect adult porn sites to be blocked in Utah" (in an incredible display of the average politician's lack of forward-thinking skills), and efforts to overturn similar laws passed in other states are already underway in many cases.

Unfortunately, this is not a U.S.-specific problem by any means. Last month, EU regulators determined that the strictest requirements under the Digital Services Act will apply to Pornhub, as well as Xvideos and Stripchat. Designation of these services as "very large online platforms" will obligate them to add digital age verification to their sites, among other measures. Age verification as the solution to "child internet safety" is the growing consensus among Western lawmakers worldwide, and yet it's currently flying under the radar of many privacy-conscious people.

The "Private" Solution

There is an age verification solution being touted as the private option. Aylo and some security experts are proponents of so-called "device-based age verification." This is a system where your age will be independently verified by your phone's operating system (through its digital wallet or some similar means). Your phone would then share that verification status with websites, instead of making each site perform that verification independently.

Doing this in a "zero-knowledge" fashion is not impossible. It would be relatively easy to devise a solution in which your phone verifies your age with your local government, and acts as a middleman to share a de-identified verification with the sites you visit. The website would only have the knowledge that your age was verified, without having to receive a plethora of other information such as your exact age, name, address, etc. Your government would receive no knowledge about what websites you visit either, only that your digital ID was linked to some device. On the surface, this all sounds well and good, right?
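To make the "middleman" idea a little more concrete, here is a minimal sketch of one way it could work, using an RSA blind signature. To be clear about the assumptions: this is not a true zero-knowledge proof, and it is not the system proposed by Aylo or by any government. The key is a toy key that is far too small for real use, the ID check is stubbed out as a boolean, and every function name here is hypothetical. The point is only to show that the issuer can vouch for a token it never sees, and that the website can check that voucher without learning who you are.

```python
import hashlib
import secrets
from math import gcd

# Toy RSA key for a hypothetical government issuer, built from two Mersenne
# primes. Far too small for real use; it only keeps the sketch self-contained.
P, Q = 2**127 - 1, 2**89 - 1
N = P * Q
E = 65537                            # public exponent, known to every website
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent, known only to the issuer


def digest(token: bytes) -> int:
    """Map a token to an integer modulo N."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def device_blind(token: bytes) -> tuple[int, int]:
    """Device: hide the token behind a random factor r before sending it out."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if gcd(r, N) == 1:
            break
    return (digest(token) * pow(r, E, N)) % N, r


def government_sign(blinded: int, is_adult: bool) -> int:
    """Issuer: checks the ID (stubbed as is_adult), signs without seeing the token."""
    if not is_adult:
        raise PermissionError("age check failed")
    return pow(blinded, D, N)


def device_unblind(blind_signature: int, r: int) -> int:
    """Device: strip the random factor, leaving a plain signature on the token."""
    return (blind_signature * pow(r, -1, N)) % N


def website_verify(token: bytes, signature: int) -> bool:
    """Website: learns only that the issuer vouched for this token."""
    return pow(signature, E, N) == digest(token)


token = secrets.token_bytes(16)      # random value that carries no identity
blinded, r = device_blind(token)
signature = device_unblind(government_sign(blinded, is_adult=True), r)
print(website_verify(token, signature))
```

In this sketch the issuer only ever sees the blinded value, so it cannot later match a token presented to a website with the person it verified. It does nothing to stop a token from being copied or shared, which a real design would also have to address.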

Of course, the likelihood of governments opting for a zero-knowledge system (instead of something like a centralized age-verification server under their control which gets pinged every time you visit a website) feels fairly low. We saw this with the abysmal "COVID-tracing" rollout, in which many governments opted to roll out their own contact tracing apps which were far more invasive than the privacy-preserving solutions devised by Apple and Google for their devices. However, even in a perfect world the proposed device-based solution is incredibly problematic.

DRM, but Worse

Beyond the issues of creating a digital ID in the first place, the device-based solution opens up an entirely new problem which will have a devastating effect on the computing industry overall. Such a solution would make Big Tech companies like Apple, Google, and Microsoft the gatekeepers of the internet once and for all. Competitors, particularly open-source ones, will have to integrate their products with the proprietary verification solutions put in place by these companies to achieve the same functionality which everybody browsing the internet enjoys today.

No matter which way you slice it, a device-based solution to this problem would be the ultimate DRM. Not serving to "protect" copyrighted content from users in the traditional sense, but to "protect" users from content deemed inappropriate, creating a digital walled garden in which only the big players have the government approval and integration with browsers and websites to participate.

Like traditional DRM, this could have effects on open-source, user-centric software ranging from problematic to downright devastating. Even today our most privacy-focused organizations like Mozilla are forced to acquiesce to running proprietary solutions that check in with Big Tech companies to access content on Netflix, Hulu, YouTube, and other large streaming platforms. Fully Free-and-Open-Source Software (FOSS) is unable to access this content at all! The divide between competitors will only increase with age verification which is woven into the very fabric of the operating systems we use. We will be creating a situation where developers have to ask for permission from their powerful competitors to build a better product, and as a result the choices available to you as a user will dwindle to a handful, unless you are one of the few willing to give up basic functionality for freer experiences.

Beyond Adult Content

Once a system is made, companies and governments will use it, often for purposes far outside the original scope of the tool. Conservative and religious groups will push for age verification to apply to more mundane resources, such as those related to sexual education or mental health. Oppressive regimes will have a way to easily restrict online resources which might be vital to young activists or marginalized communities (who may not have easy access to a digital ID in the first place), with that restriction enforced by the operating system of your own device.

Once a digital ID system with web-facing checks like this is in place, it can easily be expanded. Governments can begin to demand sites require verification of details other than age, because the system is already built. Streaming services might begin to verify your location based on the address on your digital ID, for example, locking people out of using a VPN to bypass geo-restrictions. Big Tech companies like Apple might come out and say they'd totally never build systems which go beyond simple age verification, to score some privacy points among their customers. Relying on the benevolent promises of these corporations to police government overreach doesn't strike me as better than relying on the government's promises themselves. Not building these invasive services in the first place is the only way to ensure this doesn't happen.

None of this is pure speculation. It is behavior we already see in countries which have implemented censorship of internet content, often beginning with increased restrictions on pornography before expanding heavily. This is not restricted to "third-world" or less developed countries either. South Korea can act as an example of what the future of the internet in the United States might look like if certain people get their way. South Korea is one of the few developed nations where pornography is largely illegal, and internet censorship is hugely prevalent far beyond even that scope. Despite the obvious free speech implications, politicians in the United States and other Western countries are increasingly looking towards systems like this as a model to further consolidate their power over their citizens.

We are rapidly building a future in which the devices we own actively work against us, and have frightening control over our access to content which powerful stakeholders might deem unsuitable. It doesn't have to be this way. Privacy advocates have already been successful at blocking measures which supposedly protect children by taking away freedom from adults, and we can't stop now.

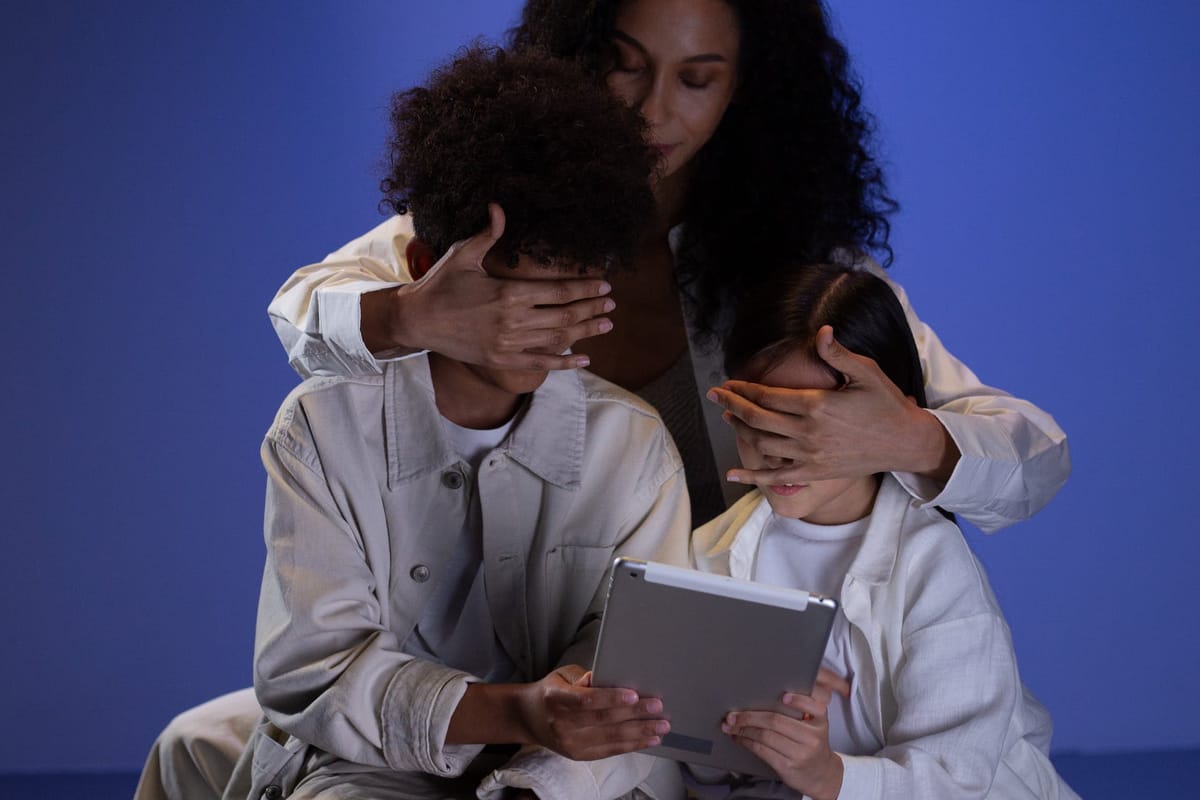

Device-based age verification is not a solution, because age verification in general is not the solution to children accessing inappropriate content in the first place. Instead of continuing to delegate parental responsibilities to the government, perhaps the vocal minority of parents who wish to do so should take the time to educate themselves on technology, educate their children on what is and isn't appropriate, and bear some responsibility themselves. We'll all be better off.